David Sweet

VirtualCamera

|

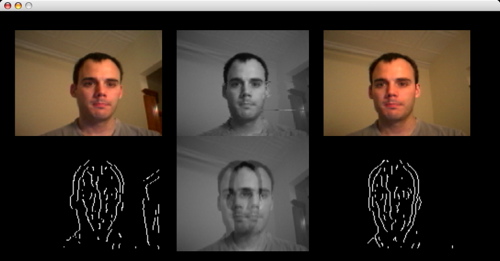

| Fig 1., clockwise from upper left: (i) right camera image, (ii) real-time, 30fps, synthetic, virtual camera image, (iii) left camera image, (iv) features detected in left image, (v) superposition of left and right images, (vi) features detected in right image |

This application, VirtualCamera, uses StereoVideoLib to demonstrate a stereo video view synthesis algorithm.

Download VirtualCamera: virtualcamera20060731.tgz

Note: You will need two cameras connected to your Mac. I use two external FireWire iSight cameras. If they aren't connected, the application will exit without an error message.

Real-Time Stereo Video View Synthesis

I develop an algorithm that combines the video streams from a pair of cameras into a single output video that appears to have been captured by a third, "virtual" camera. The objective is to create the cleanest, most accurate output video under the constraint that the algorithm run at 30 frames/second on a personal computer while leaving ample resources for other tasks (e.g., encoding and transmitting the video).

My interest in this project stems from a desire to have a true "face-to-face" video conference (or video chat). Typically when video chatting (e.g., with Apple's iChat), you see the other party's forehead, as depicted in the images on Apple's iChat page. This happens because the other party's camera is placed above their monitor. To see your image, they must direct their head and eyes downward, away from the camera and toward the monitor. As a result, the camera captures (and, thus, you see) an image of a downward-tilted head with downward-pointing eyes.

A face-to-face conversation would require the camera to be placed directly behind the monitor. This is typically physically impossible (but maybe not forever), so one seeks an approximate solution. One such solution is to use view synthesis to create a virtual camera (Seitz1995, Criminisi2003).
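The page does not spell out how VC works, but the basic idea behind the view interpolation cited above (Seitz1995) can be sketched for a single scanline. This is a minimal sketch, not VC: it assumes the two images are rectified (parallel cameras, so corresponding points lie on the same row) and that an integer disparity d = x_L - x_R is already known for each left pixel. For such cameras, a virtual camera placed a fraction alpha of the way from the left camera to the right camera sees the point at x_L - alpha * d, and here its intensity is blended with the same weight. The function name and its arguments are hypothetical.

```python
def interpolate_scanline(left, right, disparity, alpha):
    """Forward-warp one rectified scanline pair into a virtual view.

    left, right: equal-length lists of pixel intensities (same image row).
    disparity:   disparity[x_l] = x_l - x_r as an int, or None if the left
                 pixel has no known match.
    alpha:       virtual camera position along the baseline
                 (0.0 = left camera, 1.0 = right camera).
    Returns a list of intensities; None marks a hole nothing landed on.
    """
    width = len(left)
    out = [None] * width
    nearest = [float("-inf")] * width  # z-buffer: larger disparity = closer
    for x_l in range(width):
        d = disparity[x_l]
        if d is None:
            continue  # no correspondence for this pixel
        x_r = x_l - d  # matching pixel in the right scanline
        if not 0 <= x_r < width:
            continue
        x_v = round(x_l - alpha * d)  # position moves linearly with alpha
        if 0 <= x_v < width and d > nearest[x_v]:
            nearest[x_v] = d
            # intensity is blended with the same weight as the position
            out[x_v] = (1 - alpha) * left[x_l] + alpha * right[x_r]
    return out


print(interpolate_scanline([10, 20, 30, 40, 50, 60],
                           [30, 40, 50, 60, 70, 80],
                           [None, None, 2, 2, 2, 2], 0.5))
```

When two left pixels land on the same virtual pixel, the one with the larger disparity (closer to the cameras) wins; pixels that nothing lands on stay None and would need hole filling in a real system.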

Fig. 1, above, shows an image from such a virtual camera (top row, center). Notice how the subject appears to be facing the camera, even though the source images (top row, left and right) were captured by cameras placed to the left and to the right of the subject. Fig. 1 is a screenshot of the VirtualCamera application running on a MacBook Pro. We will refer to the algorithm that generates these virtual views, the algorithm under development here, as VC.

The application also implements a second algorithm, which we will call DP, that uses dynamic programming. Algorithms like DP have been studied and written about extensively (Ohta1985, Criminisi2003). This implementation is simplistic compared to state-of-the-art dynamic programming algorithms and is included here only for comparison with VC. [For the curious: the dynamic programming algorithm used in view synthesis is an elaboration of dynamic time warping.]
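For readers unfamiliar with the approach, the following is a minimal, textbook-style sketch of scanline stereo by dynamic programming, in the spirit of Ohta1985; it is not the DP implementation shipped with VirtualCamera. Like dynamic time warping, it finds the cheapest monotone alignment of two sequences (here, the same row from the left and right images), but it also lets a pixel stay unmatched (occluded) at a fixed penalty. It assumes rectified, equal-length scanlines, an absolute-intensity-difference matching cost, and non-negative disparities (the left camera really is on the left). The function name and the parameters `max_disp` and `occlusion_cost` are hypothetical.

```python
INF = float("inf")


def dp_scanline(left, right, max_disp, occlusion_cost=20.0):
    """Match one rectified scanline pair by dynamic programming.

    Returns disparity[x_l] = x_l - x_r for each matched left pixel,
    or None where the left pixel was judged occluded (unmatched).
    Assumes 0 <= disparity <= max_disp (camera ordering is known).
    """
    n = len(left)
    if len(right) != n:
        raise ValueError("scanlines must have the same length")
    # cost[i][j]: cheapest alignment of left[:i] with right[:j]
    cost = [[INF] * (n + 1) for _ in range(n + 1)]
    move = [[None] * (n + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(n + 1):
        # only visit cells inside the disparity band 0 <= i - j <= max_disp
        for j in range(max(0, i - max_disp), i + 1):
            if i == 0 and j == 0:
                continue
            best, how = INF, None
            if i > 0 and j > 0:  # pair left[i-1] with right[j-1]
                c = cost[i - 1][j - 1] + abs(left[i - 1] - right[j - 1])
                if c < best:
                    best, how = c, "match"
            if i > 0:  # left[i-1] has no partner (occluded in the right view)
                c = cost[i - 1][j] + occlusion_cost
                if c < best:
                    best, how = c, "skip_left"
            if j > 0:  # right[j-1] has no partner (occluded in the left view)
                c = cost[i][j - 1] + occlusion_cost
                if c < best:
                    best, how = c, "skip_right"
            cost[i][j], move[i][j] = best, how
    # Trace the optimal monotone path back from (n, n).
    disparity = [None] * n
    i = j = n
    while i > 0 or j > 0:
        how = move[i][j]
        if how == "match":
            disparity[i - 1] = i - j  # (i - 1) - (j - 1)
            i, j = i - 1, j - 1
        elif how == "skip_left":
            i -= 1
        else:
            j -= 1
    return disparity


left = [0, 10, 20, 30, 40, 50, 60, 70]
right = [10, 20, 30, 40, 50, 60, 70, 80]  # same row, shifted by one pixel
print(dp_scanline(left, right, max_disp=3))
```

Because the search is restricted to 0 <= d <= max_disp, even with max_disp equal to the scanline width the band covers only about half of the full table. Swapping the inputs makes the true disparities negative, so they fall outside the band; this illustrates how relying on camera ordering buys speed at the cost of symmetry.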

While the application is running, use <CTRL>-<OPTION>-<C> to switch between VC and DP. Note the following:

- VC is much faster than DP. Because DP (this implementation of a dynamic programming algorithm) is already simple, this suggests, in part, that it cannot be made to run much faster than it does now. In addition, VC is more efficient than DP with respect to image resolution. (N.B., neither algorithm is optimized with MMX, SSE, the GPU, etc.)

- VC runs at 30 fps with enough CPU cycles left over to watch a video in iTunes.

- VC is symmetric with respect to the cameras, i.e., it produces the same result when the input streams are swapped. [DP is not, because, as is common in the literature, it makes explicit use of a requirement on the ordering of the cameras to nearly double the speed of the algorithm.]

- VC works when the cameras are fronto-parallel as well as when the cameras converge (i.e., point inward toward the subject) even though no image rectification is performed.

- The images (for both algorithms) show spatial and temporal processing artifacts and errors.

- You will need to vertically align the cameras by hand. (It might be possible to automatically vertically align the images in a preprocessing step.)
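One simple way the automatic vertical alignment suggested above might work is sketched below, under strong assumptions: the misalignment is a pure vertical translation by a whole number of rows (no rotation or scaling), the images are grayscale lists of rows, and row-averaged brightness is distinctive enough to match. The function names and the `max_shift` parameter are hypothetical; this is not part of VirtualCamera.

```python
def row_profile(image):
    """Mean intensity of each row of a grayscale image (a list of rows)."""
    return [sum(row) / len(row) for row in image]


def estimate_vertical_offset(left, right, max_shift=20):
    """Return the integer shift s such that right row y + s best matches left row y.

    Compares row-mean profiles by mean absolute difference over the rows
    that overlap, trying every shift in [-max_shift, max_shift].
    """
    p_l = row_profile(left)
    p_r = row_profile(right)
    best_shift, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        pairs = [(p_l[y], p_r[y + s])
                 for y in range(len(p_l)) if 0 <= y + s < len(p_r)]
        if len(pairs) < len(p_l) // 2:
            continue  # require substantial overlap to avoid spurious matches
        cost = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift


left = [[v] * 4 for v in [0, 5, 30, 80, 20, 10, 60, 40, 0, 90]]
right = [[99] * 4, [99] * 4] + left[:-2]  # same scene, two rows lower
print(estimate_vertical_offset(left, right, max_shift=4))
```

One image would then be shifted by the returned number of rows before running VC or DP.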

References:

- (Ohta1985) Y. Ohta and T. Kanade, Stereo by Intra- and Inter-Scanline Search Using Dynamic Programming, IEEE Trans. Pattern Analysis and Machine Intelligence, Vol. PAMI-7, No. 2, 1985, 139-154.

- (Seitz1995) S. Seitz and C. Dyer, Physically-Valid View Synthesis by Image Interpolation, Proc. Workshop on Representation of Visual Scenes, 1995, 18-25.

- (Criminisi2003) A. Criminisi, J. Shotton, A. Blake, C. Rother, and P.H.S. Torr, Efficient Dense-Stereo and Novel-view Synthesis for Gaze Manipulation in One-to-one Teleconferencing.